AI Agents Explained for Non-Techies

Explained Simply By a Fellow Business Person

I recently finished Google’s 5-day intensive Gen AI bootcamp in my free time.

As a business person diving into the tech side, I have to admit: the courses and assignments weren't easy! It was a challenging deep dive into complex topics.

While tackling white papers and coding, I looked for a better way to grasp how AI agents work. I found the best method was relating them to concepts I handle daily, like business operations. Thinking about agents through that business lens made things click.

So, what is an AI agent in simple terms?

Think of it like a highly focused employee. It’s designed to achieve specific goals autonomously. This could be anything from reserving conference rooms and booking flights to writing code or conducting research.

In this post, I'll explain AI agents from a business point of view. My hope is to make this powerful technology more understandable for everyone, not just coders. Let's explore how AI agents function much like members of a team.

LLM vs. AI Agent: Knowledge vs. Action

First of all, we have to distinguish a Large Language Model (LLM) from an AI agent. So what’s the difference? The key is action. An LLM holds massive foundational knowledge, like an employee with general education and skills.

An AI agent, however, takes that knowledge and acts to complete specific tasks. It's like an employee using company tools and data to actively book a flight, not just knowing about flights. The agent integrates knowledge with execution.

The Core Components: Anatomy of the AI Agent "Employee"

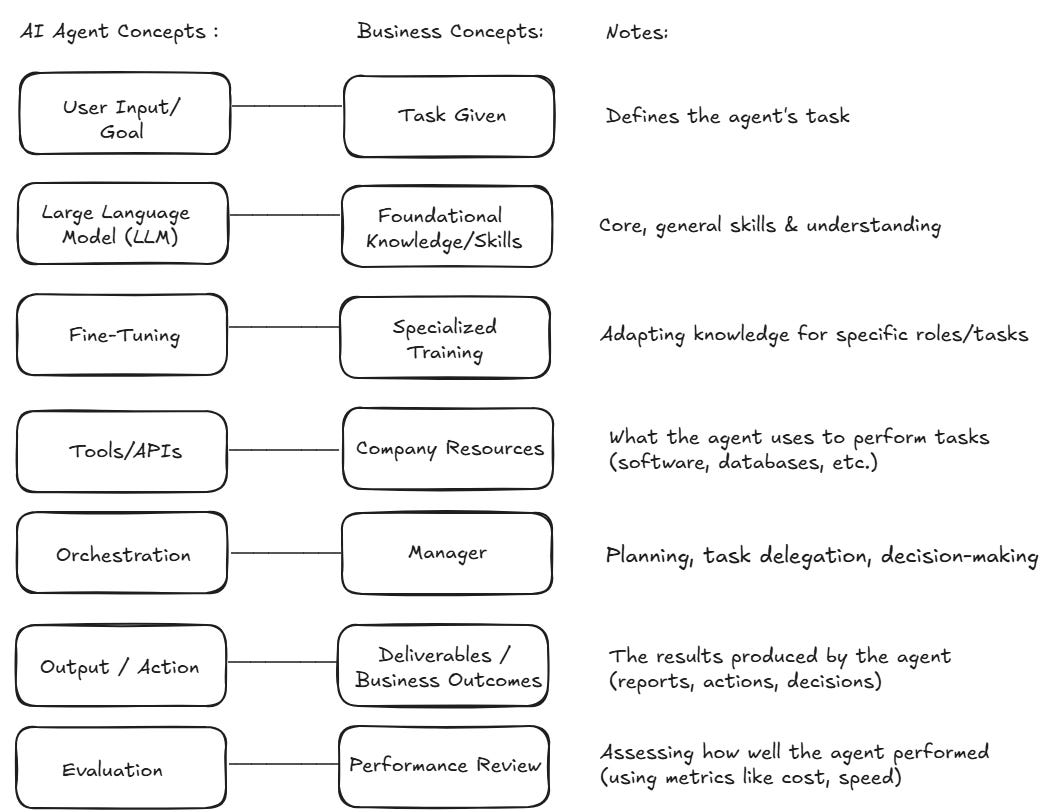

AI agents have several core components, much like an employee needs certain things to work effectively. While this is a simplification, comparing the concepts side-by-side helps clarify how agents work.

Just like an employee's workflow, an agent's process has several parts:

The Task → Inputs/Goals: First, an employee needs an assignment or goal. Similarly, an AI agent starts with a specific input or objective provided by a user or another system. This defines what the agent needs to accomplish.

Employee Foundational Knowledge/Skills → LLM: Next, consider the agent's core intelligence. This is powered by an LLM (like GPT-4, Google's Gemini models, or Anthropic's Claude 3 family). This core is like an employee's fundamental education – think high school, basic literacy, numeracy. It provides broad understanding and language skills learned from massive datasets.

Specialized Skills → Fine-tuning: Specialized skills are often needed for specific jobs. An employee might get a degree or vocational training. Similarly, LLMs undergo "fine-tuning." They learn from specific data (like company documents or industry jargon) to become experts in a particular area.

Company Resources → Tools: An employee also needs resources like a laptop, software, or specific tools. Likewise, AI agents need access to "tools." These are often APIs connecting them to software, databases, or the internet. These tools let the agent interact with the world and gather real-time information.

Tool Example - RAG: A common technique using tools is RAG (Retrieval-Augmented Generation). With RAG, the agent accesses external knowledge bases, like product catalogs or company documents. This helps it provide accurate, up-to-date answers and reduces the risk of making things up ("hallucination"). It's like a stylist checking inventory before recommending a specific dress.

Manager’s Direction → Orchestration Layer: Every team also needs direction. An agent's "Orchestration Layer" acts like its manager or team lead. This layer breaks down complex goals into smaller steps. It then plans these steps, makes decisions, and directs the agent's actions to ensure the task gets done correctly.

The Result → Outputs/Actions: Ultimately, an employee produces work or completes tasks. An AI agent delivers outputs – this could be an answer, a report, a booked flight, or written code.
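For readers who want to see how these pieces fit together, here is a minimal Python sketch of the "employee" anatomy above, borrowing the stylist example. Everything in it is a made-up assumption for illustration: `fake_llm` is a stand-in that only formats text (a real agent would call an actual model), `CATALOG` is a hypothetical product catalog, and `check_inventory` is a hypothetical tool. Treat it as a toy, not as how any particular product works.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical product catalog standing in for an external knowledge base.
CATALOG = {
    "red dress": {"in_stock": True, "price": 79},
    "blue dress": {"in_stock": False, "price": 65},
}


def fake_llm(prompt: str) -> str:
    """Stand-in for a real LLM call; here it only formats text."""
    return f"Recommendation: {prompt}"


def check_inventory(item: str) -> dict:
    """A 'tool': looks up facts the LLM would not know on its own."""
    return CATALOG.get(item, {"in_stock": False, "price": None})


@dataclass
class Agent:
    llm: Callable[[str], str]  # foundational knowledge
    tools: dict                # company resources

    def run(self, goal: str, candidates: list[str]) -> str:
        """Orchestration: check facts with a tool, then let the LLM word the answer."""
        lookup = self.tools["check_inventory"]
        # Step 1: keep only the items that are actually available.
        available = [c for c in candidates if lookup(c)["in_stock"]]
        if not available:
            return self.llm(f"nothing in stock for '{goal}'")
        # Step 2: pick the first available item and fetch its price.
        choice = available[0]
        price = lookup(choice)["price"]
        # Step 3: produce the output.
        return self.llm(f"{choice} at ${price} for '{goal}'")


agent = Agent(llm=fake_llm, tools={"check_inventory": check_inventory})
result = agent.run("party outfit", ["blue dress", "red dress"])
print(result)  # Recommendation: red dress at $79 for 'party outfit'
```

The goal ("party outfit") is the input, `fake_llm` plays the LLM, `check_inventory` is the tool, the `run` method is the orchestration layer, and the printed recommendation is the output. Checking the catalog before answering is the same idea behind RAG: the agent skips the blue dress because the catalog says it is out of stock.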

How Do We Measure an AI Agent's Performance?

Evaluating an AI agent is much like an employee's performance review. Various metrics are used to see if it's doing a great job. Key evaluation criteria include:

Business Outcome: Does the agent achieve the desired result (e.g., resolve customer issues, generate leads, complete accurate reports)? This is arguably the most important evaluation criterion.

Latency: How quickly does it complete tasks or respond? (Think project deadlines or customer response times).

Cost: What resources does it use (computing power, API calls)? (Like tracking department budgets or the cost associated with employee time and tools).

Reliability: Does it perform correctly and avoid errors consistently? (Similar to employee dependability and quality standards).

Safety: Does it operate securely and follow rules (like data privacy)? (Akin to corporate governance and security protocols).

Fairness/Bias: Does it produce unbiased outputs and make fair decisions? (Similar to fair business practices and non-discrimination policies).

Explainability: Can we understand why it made a specific decision or took an action? (Comparable to transparent processes or audit trails).
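Some of these criteria can be turned into a simple scorecard. The Python sketch below computes four of them (business outcome, latency, cost, and reliability) from a log of agent runs. The log, its field names, and every number in it are invented for illustration; safety, fairness, and explainability usually need human review and are not captured by a few averages.

```python
from statistics import mean

# Hypothetical log of four agent runs; all values are made up for illustration.
runs = [
    {"resolved": True,  "seconds": 2.0, "cost_usd": 0.01, "error": False},
    {"resolved": True,  "seconds": 4.0, "cost_usd": 0.02, "error": False},
    {"resolved": False, "seconds": 6.0, "cost_usd": 0.03, "error": True},
    {"resolved": True,  "seconds": 4.0, "cost_usd": 0.02, "error": False},
]


def scorecard(runs: list[dict]) -> dict:
    """Summarize a run log into a small 'performance review'."""
    n = len(runs)
    return {
        # Business outcome: share of tasks that reached the desired result.
        "success_rate": sum(r["resolved"] for r in runs) / n,
        # Latency: average time per task.
        "avg_latency_s": mean(r["seconds"] for r in runs),
        # Cost: total spend across all runs.
        "total_cost_usd": round(sum(r["cost_usd"] for r in runs), 4),
        # Reliability: share of runs that hit an error.
        "error_rate": sum(r["error"] for r in runs) / n,
    }


report = scorecard(runs)
print(report)
```

With this sample log, the agent resolved three of four tasks (a 75% success rate), averaged 4 seconds per task, cost 8 cents in total, and hit an error in one run out of four.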

Real-World Examples of AI Agents Today

AI agents are already active in various business functions:

Coding Assistants: Tools like GitHub Copilot and many others help developers write, debug, and refactor code more efficiently.

Customer Support Bots: Many companies use AI agents to handle FAQs and initial customer queries via chat, web, or phone, often blending seamlessly with human support if needed.

Travel Agents: AI can search itineraries, compare prices, and book flights or hotels based on user preferences.

Executive Assistants: Emerging AI tools can manage calendars, schedule meetings by reading emails, and draft messages.

Research Assistants: Agents integrated into search (like Perplexity AI or specialized modes in Gemini/ChatGPT) can browse the web, synthesize findings, and generate detailed reports.

What Does This Mean for Our Society? AI Agents and the Future of Work

Thinking about AI agents as these super-efficient "employees" naturally makes me ask bigger questions. AI agents are getting smarter and faster at a rate that's hard to keep up with. So, what does that actually mean for people's jobs down the line?

I hear a lot of discussion about how AI is just helping us, augmenting our skills rather than replacing us. Sure, some of that is definitely happening. But seeing how fast AI is improving, I think it’s normal to worry about job displacement, at least in the short term. We're already seeing an impact in areas like customer service, content creation, and coding assistance.

However, it's crucial to remember that achieving perfect accuracy with AI agents is still a significant hurdle. The issue of hallucination, where AI generates incorrect or nonsensical information, means that moving from, say, 90% accuracy to 100% requires a massive amount of effort and refinement. This necessitates a continued and significant role for human oversight and intervention in the foreseeable future, a "human-in-the-loop" approach (e.g., handling edge or novel cases, verifying generated data, and correcting hallucinations).

But still, there is the million-dollar question: how do we - the people - stay relevant? Getting serious about learning new skills, especially how to work with these AI tools, feels critical.

Perhaps it’s about focusing more on what makes us human: connecting with others, creativity, strategic thinking, handling complex social situations, appreciating rich in-person experiences like dining out or going for a hike with friends – things AI still struggles with.

I don’t know what the long-term impact will be; entirely new industries and jobs may well emerge. But for now, we need to have real conversations about managing the transition that’s coming up, acknowledging both the power of AI and the enduring value of human intelligence and adaptability.